What types of data are we analyzing? How much data do we have? What type of database should we use? What platform should we use? What kind of insights are we looking for? How quickly do we need the insights? Does it have to be real-time? These are just a few of the questions that organizations need to address when approaching near real-time analytics. In this blog series, we explore the critical role of real-time analytics and show you how to put it into practice within your organization.

Before we explore practical steps to harness the value of real-time analytics, let’s unpack the working definition. Analytics is defined as information resulting from the systematic analysis of data or statistics to enable businesses to make informed decisions. As an extension of this definition, near real-time analytics are actionable insights done (close to) on-the-go. For example, credit card processing companies needing to identify fraudulent transactions or fraud trends over time can apply near real-time analytics to gain insight and inform decision making.

In part one of this blog series, we cover a few of the initial steps that analytics leaders should take to prime their organizations for real-time analytics.

Get a grasp on your data

To get started, let’s dig into data a bit and understand what data is needed immediately versus what is best aggregated. Let’s take the same example of a credit card processing company. There are millions of credit card transactions happening every minute, and the company needs to make a call on whether it needs aggregation at the level of granularity (grain) of an account for certain period i.e. daily, monthly, quarterly and yearly, etc. Ultimately, the approach should be aligned to the business requirement at hand. For example, if the credit card processing company’s goal is to understand total spend on travel, then the grain of data should be on the merchant category so it can be aggregated to calculate total travel spend over a period of time for a given merchant. However, if the business goal is to aid customers with a seamless application that enables them to verify fraudulent transactions, then the data does not need to be aggregated and should be processed on an immediate basis.

Processing data in real-time can be challenging/costly/computationally intensive, so it’s important to make sure the fields that are needed in real-time are used for that purpose, but the others are not.

Make a call on processing

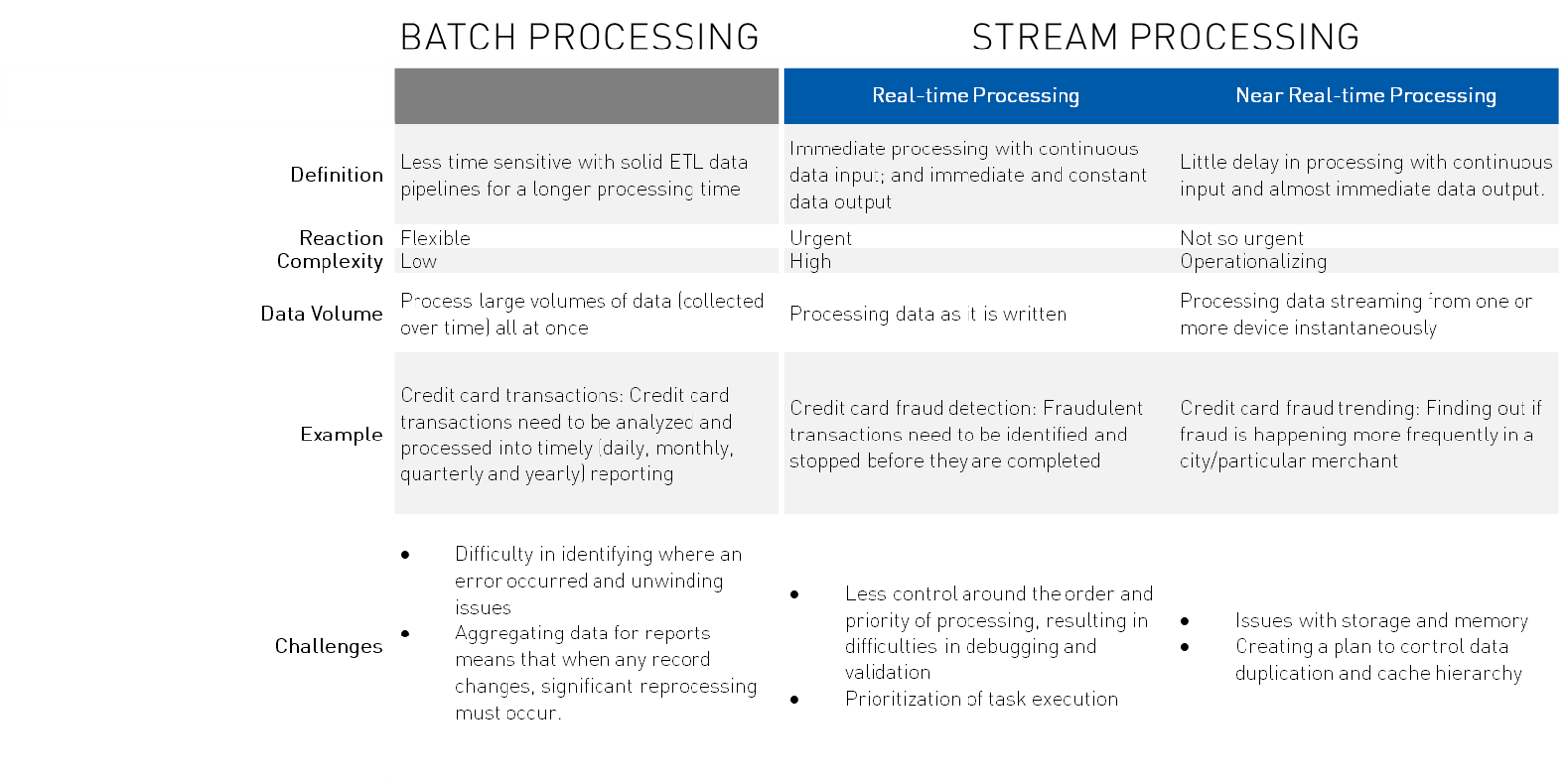

Once we have a grasp on the data, it’s important to figure out how the data will be processed. There are generally two ways to approach this depending on the intended purpose: batch processing versus stream processing. If we are dealing with historical and archived data, where time isn’t too crucial, we should lean towards batch processing with traditional ETL data pipelines. When answers need to be delivered within seconds to be of value, we should lean towards stream (real-time or near real-time) processing.

Putting these elements into practice, each data processing method has its pros and cons. The best choice ultimately depends on the use case at hand and how you are looking to use your data. For example, the credit card processing company may wish to implement a monetizing strategy around detecting fraud transaction in real-time. If the company has a skilled IT workforce with infrastructure budget, near real-time processing would be the best bet.

Learn about the tools to facilitate smarter data processing in part two of our blog series.